Hey, kid

[¬self-improvement ≠ self-destruction] ∪ [Self-improvement ≠ ¬self-destruction] ∪ [Self-improvement ⊆ Self-destruction]

recognize you

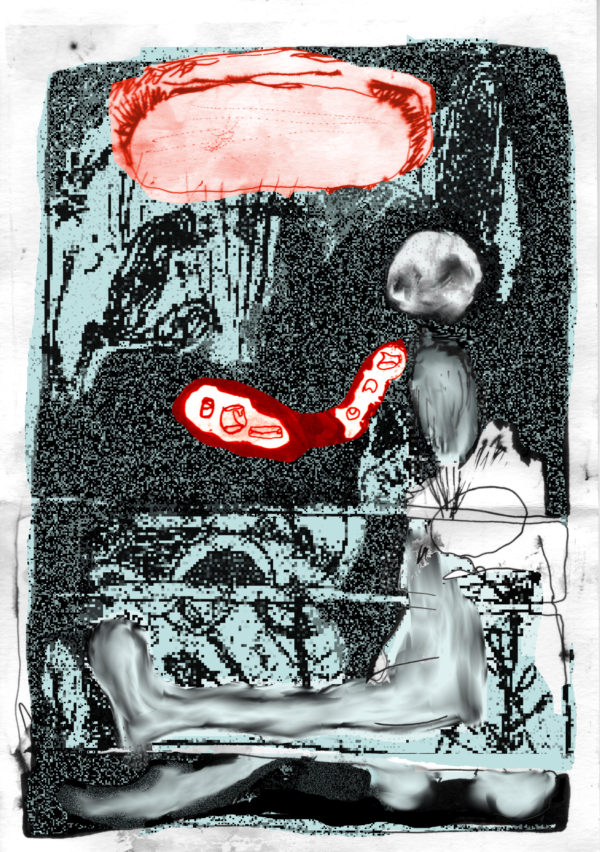

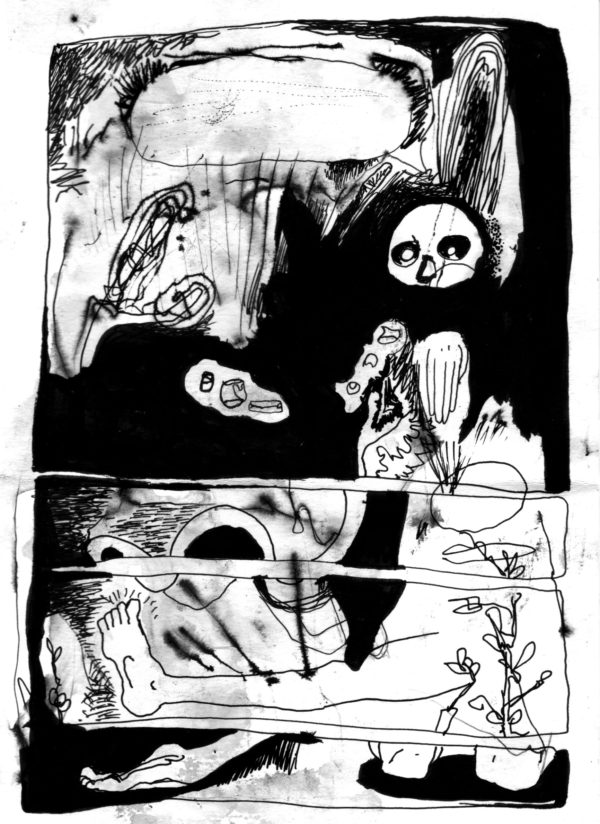

I made these two A5 ink drawings and then treated one of them. This treatment was inspired by The Flame Alphabet.

Saturated. The Rest.

Saturated. The Rest. is a collaboration between these amazing humans/techgnomes: Pierre Alexandre Tremblay, Sylvain Pohu, Patrick Saint-Denis, and Rodrigo Constanzo. The following 4 videos I made focus on different aspects of the project.

The creative process behind the Black Box Project. Released Jan. 9, 2017.

A quick and dirty 2-minute introduction to the project. Released Nov. 2016.

Tech Feature: the design of an instrument. Sept. 2016

Showing something of the beginning of the Black Box project. July 13, 2016. (Only the editing is mine; shot by Rod and PA.)

So many nails piercing the flesh, so many forms of torture

I manipulated this video that Daniela Amortegui and Alex Grimes recorded. I time-stretched, color-fiddled, and edited/reorganized Daniela’s reading of text she had written. Isolating her breath(s) was really important to me too. Then I added text to the video as well, since I knew that during the performance it would be the piano speaking* for her, which can be difficult to understand. I also played with shifting the text and the video so they’re not always synced, and at times I’ve added my own imaginary text. I wanted to treat this like poetry. Then Alex thought it would be a cool idea if we obfuscated some of the text, and so we did that too. This was played/performed at the Pianos Without Organs Festival on Oct. 9, 2016 in Raleigh, North Carolina (the best Carolina).

*Curious about how a piano can speak? Watch this video I made about a Robotic Piano workshop.

Videos for YSWN (Yorkshire Sound Women Network)

There are 6 videos in this post.

Over the years, I’ve worked quite a bit with the Yorkshire Sound Women Network. Though I was behind the lens, I absorbed much at each workshop and event they put together, not the least of which was the enthusiasm these events generated. YSWN is an organization that actively encourages girls and women to gain competency with sound equipment and music making. Here is a collection of those videos (my favorite is the Noisey Toy Assembly video below. You’ll see why).